Optical Computing: Light and the Future of Computing

Modern silicon-based computing is running into a serious problem: a physical limit. This limit comes from the size of the transistors we use; once they shrink past a certain point, electrons stop behaving predictably. They can tunnel through the barriers meant to contain them, states become indistinguishable, the Heisenberg uncertainty principle makes things very uncertain, and the chip gets so hot that it breaks down.

We briefly mentioned this in our article on 3D-NAND, where the basic idea is to build upward instead of shrinking transistors even further. Even with a third dimension, we will still reach a limit to our data storage density eventually, and likely in the near future.

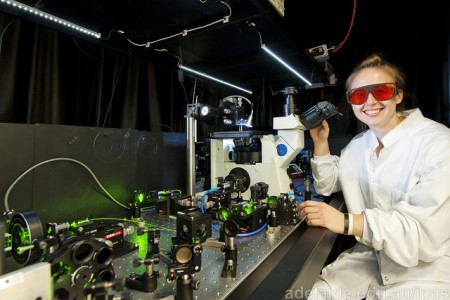

Moore’s Law observes that the number of transistors on a computer chip doubles roughly every two years (a figure often quoted as eighteen months). If this trend is to continue, we must look to alternative methods of data storage and transfer. The topic of this article is one of those alternatives: computational photonics, also known as optical computing.
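
To get a feel for how quickly that kind of doubling adds up, here is a minimal sketch. The starting count of one billion transistors is a made-up figure for illustration, and the doubling period is left as a parameter because both eighteen months and two years are commonly quoted.

```python
def projected_transistors(initial_count, years, doubling_period_years=2.0):
    """Project a transistor count forward, assuming steady doubling.

    This is a simple exponential model of the trend, not a prediction
    for any real chip.
    """
    return initial_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    start = 1_000_000_000  # hypothetical starting chip (illustrative only)
    for period in (1.5, 2.0):
        print(f"doubling every {period} years:")
        for years in (3, 6, 12):
            count = projected_transistors(start, years, period)
            print(f"  after {years:>2} years: {count:,.0f} transistors")
```

Even small changes to the doubling period make a large difference over a decade, which is part of why a hard physical limit on transistor size matters so much.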

Photonics? What’s that?

First off, let’s look at the word photonics vs. electronics. We know silicon-based computing uses electrons to perform logic. Photonics uses photons, the elementary particles of light, in place of electrons. Using photons has some advantages.

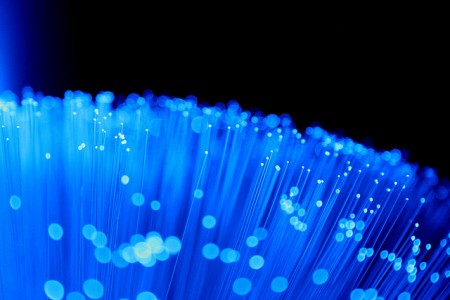

Since the speed of light is what we might call the top speed of the universe (for the most part), it makes sense to use light as an information carrier. We would be able to transfer data sets over vast distances in no time at all, since light is capable of traveling around the world about 7.5 times per second.
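
The "7.5 times around the world" figure is easy to check. The sketch below assumes light traveling in a vacuum and uses the Earth's approximate equatorial circumference; the function names are just illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum, in km/s
EARTH_CIRCUMFERENCE_KM = 40_075.0  # approximate equatorial circumference, in km


def laps_per_second(speed_km_s, circumference_km=EARTH_CIRCUMFERENCE_KM):
    """How many times a signal at this speed could circle the Earth in one second."""
    return speed_km_s / circumference_km


def travel_time_ms(distance_km, speed_km_s=SPEED_OF_LIGHT_KM_S):
    """One-way travel time in milliseconds over the given distance."""
    return distance_km / speed_km_s * 1000


if __name__ == "__main__":
    print(f"laps around the Earth per second: {laps_per_second(SPEED_OF_LIGHT_KM_S):.2f}")
    print(f"time to cover 20,000 km: {travel_time_ms(20_000):.1f} ms")
```

Keep in mind this is the best case. Light moves more slowly through glass than through a vacuum, so real-world links will come in somewhat under these numbers.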

Fiber optics already uses light as an information carrier, and it’s one of the reasons we have seen ISPs branching into fiber, such as Google Fiber, though only select cities have been graced with it so far.

Without launching into a techno-babble explanation that neither you nor I will understand, here are some basic advantages scientists think optical computing can bring to the table:

- Small in size

- Increased speed

- Scalable to large or small networks

- Less power consumption (debatable, depends on the length of transmission)

- Low heat

- Complex functions performed quickly/simultaneously (photons rarely interact with each other, thus many different beams, signifying different packets of information, could be sent at the same time in the same space)

Optoelectronics

Optoelectronic computers, which are hybrids that integrate photonic components with contemporary silicon-based components, are much more realistic than entirely optical computers. We already have prototypes of optoelectronic chips.

This hybrid approach provides some of the advantages of optical computing without completely overhauling the design, so that at least some of the currently available photonic technologies can be utilized. Unfortunately, having to convert electrical signals to light pulses and back again reduces speed and increases energy consumption.

This is what makes purely optical computers so tantalizing. Without conversion, the information travels near the speed of light the whole way.

Disadvantages

There are some drawbacks to optical computers.

The first glaring disadvantage is that we are likely still a long way off from having a fully functional, scalable, and commercially available optical computer. Unless there is a drastic breakthrough (yes, it’s possible), we still have years and millions of dollars in research ahead of us.

Another is that optical fibers are generally much wider than electrical traces. It seems that most components are currently much larger than their electrical counterparts, though this isn’t surprising given the relative youth of optical computing compared to silicon-based computers.

Finally, we don’t have the software to run these computers even if we do manage to create them. Once we get closer to creating a functional optical computer, I’m sure there will be plenty of developers working on it. For now, we just sit and wait.

Looking Forward

Despite all this promise, there’s no guarantee that optical computers will ever become a big thing.

Scientists are researching molecular computing and quantum computing alongside optical computing, both of which have major potential. Quantum computing in particular has been called “the hydrogen bomb of cyber warfare,” given its potential for solving certain incredibly complex problems, such as factoring massive numbers, extremely quickly. That could render many current methods of encryption useless.

Just like optical computing, both molecular and quantum computing present their own barriers to development and have a long way to go before they are very useful.

Until then, we’ll have to continue development of 3D-NAND to keep up with Moore’s Law. With more research and a lot of luck, we will hopefully see breakthroughs in the next ten to fifteen years regarding these various alternative methods of computing. By that time, Gillware will have to understand the complications involved in data loss for optical computing. While we don’t currently perform data recoveries on optical computers, we would love to help if you have an electronic one.