Life Lessons in Data Loss from GitLab and Gillware

GitLab is a hosting tool for source code, allowing programmers to work together on projects and collaborate from all around the world. Over 100,000 organizations, including IBM, SanDisk, and NASA make use of GitLab so their workers can collaborate easily and efficiently.

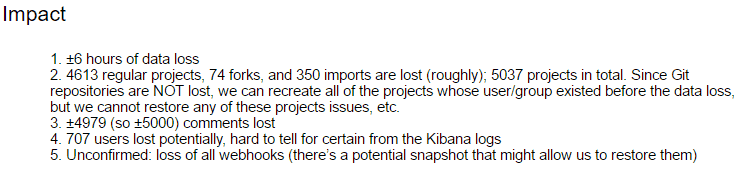

On January 31, 2017, GitLab was struck by a serious production database issue. An effort to deal with a sudden rise in spammer activity culminated in the accidental deletion of around 300 gigabytes of data from the production database. GitLab’s sysadmins ended up forced to restore the database from a six-hour-old backup. This was less-than-ideal because, as we all know, a lot can happen in six hours—especially when your product is being used by people all around the world.

It Can Happen to Anyone

There are a lot of phrases to describe these kinds of incidents. “Sh*t happens” is one. “Pobody’s nerfect” is another. When you’re managing hundreds of gigabytes of data, created by thousands of users from all four corners of the map, a single wrong move can make a bad situation worse.

Nobody, no matter how big, is immune to disaster. Even Pixar, a multi-million dollar company, nearly lost an entire movie when somebody ran the RM -RF command in Linux and accidentally told their computers to start emptying themselves as quickly as possible. Artists and programmers watched in horror as hours of hard work vanished with every passing second.

Fortunately, though, Pixar had an unlikely offsite backup. Their film, Toy Story 2, was able to make its November 1999 release date as scheduled. It went on to win a Golden Globe for Best Motion Picture in 2000.

If it can happen to Pixar, if it can happen to GitLab, it can happen to you.

To their credit, GitLab Inc’s response to this issue has been nothing short of exemplary. They wasted no time alerting their users to what had happened. Within a few hours they had already published a comprehensive look at the exact chain of events that caused this issue. They even went so far as to livestream their database restoration work. By February 1, GitLab was once again available to the public.

Life Lessons in Data Loss from GitLab and Gillware

Right now, GitLab Inc is asking itself some very hard questions about what it can do to prevent these kinds of data disasters in the future. Now is the perfect time for you to ask yourself those same kinds of questions as well.

Have some advice from Gillware about backing up your data:

Your data should never live in just one place.

Users of GitLab have all of their projects hosted both locally and in GitLab’s database. GitLab is a sort of “cloud” for programming projects. Users working on collaborative projects edit their projects on their own workstations and push the changes to GitLab, allowing their fellows to see the updates. This is just the kind of backup that you need to protect your data in the event of a disaster. As long as you keep your important files in more than one location, you won’t be left high and dry if one location becomes compromised.

GitLab losers didn’t lose any programming work. But what they did lose was almost as important. When GitLab had to restore its production database from a six-hour-old database, any changes to the metadata on GitLab users’ projects made over those past six hours vanished. This metadata included things like new comments, issue tickets logged for GitLab technical support, new users added to projects, wiki posts… all of the things that make collaborative projects tick.

Test your backups, and test them often.

There’s no use in having a backup that doesn’t work. The GitLab team found this out the hard way. When disaster struck, they found—too late—that their backup and replication techniques were far less reliable than they had supposed (fortunately, by documenting their situation so thoroughly, we can all learn from their mistakes).

We here at Gillware have received many a server in our data recovery lab after its owner incorrectly assumed that their backup system would save them in the end. Far too many people, as well, lapse into forgetting to maintain their backups. This is especially a problem with RAID-5 servers because their fault tolerance can lull people into a false sense of security.

You don’t want to get into the habit of treating data backups as a “set it and forget it” deal. Data backup isn’t just about software—it’s a service. Auditing and maintaining backups is a full-time job.

GitLab’s Response – The Beauty of Transparency

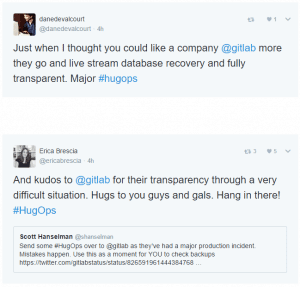

GitLab’s commitment to transparency, for better or for worse, should be commended. It’s easy to be transparent and give credit where credit is due when you’re doing well. In the wake of a disaster like this, the temptation to sweep details under the rug, or at least be a little cagey about the whole affair, must be overwhelming.

But the most important thing about transparency—and why it’s so important to commit to it, rain or shine—is that it just helps build trust. We all love honest people. The truest measure of honesty is how willing you are to stand up and admit that you made a mistake. In this difficult time, the people running GitLab were outstandingly honest, open, transparent, and humble.

If GitLab Inc had been less forthcoming about the details of this disaster, or less willing to take responsibility and deal honestly with their userbase, the public fallout would have been worse than any amount of lost data. Resentment and mistrust would quickly foment among GitLab’s users.

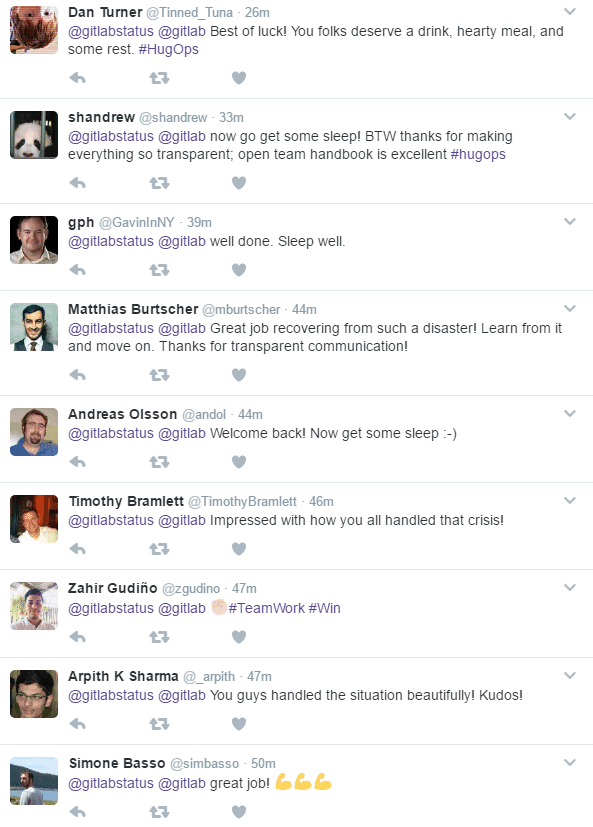

But the public response to GitLab’s situation has been incredibly sympathetic. Within a few hours of the incident, programmers and IT professionals started expressing their sympathy and condolences, as well as their admiration for GitLab Inc’s honesty and transparency, using the hashtag #HugOps. It was beautiful—like watching the town come together to help George Bailey at the end of It’s a Wonderful Life. When GitLab came back online, the response was overwhelming:

We know all too well how much it hurts when a disaster costs you your data. As GitLab recovers and moves on, we here at Gillware join hundreds in wishing them the best in their future endeavors.